AI composition recognition competition

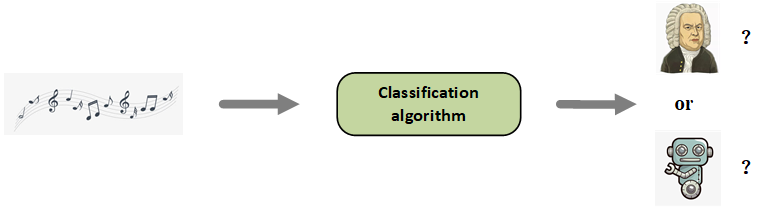

This data challenge aims to identify melodies generated by artificial intelligence algorithms. The challenge provides a development dataset containing melodies in two different music styles, generated by a number of music generation algorithms. An evaluation dataset will be released one month later. The final ranking is determined by the AUC (area under the ROC curve) achieved when judging the source of each melody in the evaluation dataset.

There is no enrolment process for this data challenge. Anyone who follows the guidance on this page and makes a successful submission will be considered a participant. Participants are expected to use the algorithms they develop to label how the melodies in the evaluation dataset were generated. The submission should include a technical report on the developed algorithm and a CSV file containing the labelling information, and should be submitted via the submission system of the Conference on Sound and Music Technology (CSMT) at the following page: https://cmt3.research.microsoft.com/CSMT2020.

The technical report should follow the CSMT manuscript requirements (CSMT is a bilingual conference, so technical reports in either Chinese or English are acceptable) and should be uploaded to arXiv before the challenge submission deadline. When submitting, please make sure that “Data Challenge” is selected as the track in the submission system.

Participants need to submit source code for algorithm validation (an NDA can be signed if necessary). Participants who refuse to submit source code upon request will be disqualified. Any programming language is allowed. The complete code should include a function for reading the evaluation dataset, the main algorithms that generate the system output, and a list of the packages used in the code.

Participants are not allowed to make subjective judgments of the evaluation data, that is, to have human musicians label the music. Participants who make subjective judgments will be disqualified.

Development dataset

The development dataset contains 6000 MIDI files with monophonic melodies generated by artificial intelligence algorithms. The tempo is between 68 bpm and 118 bpm (beats per minute). Each melody is 8 bars long and does not necessarily include complete phrase structures. Two datasets with different music styles were used to train a number of algorithms, and those algorithms generated the melodies in the development dataset. The MIDI files in the development dataset are named in the following format:

development-id.mid
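Since the submitted code must include a function for reading the dataset, the following is a minimal sketch of a first step: parsing the header chunk of a Standard MIDI File and converting a tempo value to bpm. It uses only the Python standard library. The function names and the range check are illustrative assumptions and are not part of the challenge materials; a full reader would also need to parse the track chunks.

```python
import struct

def parse_midi_header(data: bytes) -> dict:
    """Parse the 14-byte header chunk at the start of a Standard MIDI File."""
    if data[:4] != b"MThd":
        raise ValueError("not a Standard MIDI File: missing MThd chunk")
    # Big-endian: chunk length (4 bytes), format, track count, division (2 bytes each).
    length, fmt, n_tracks, division = struct.unpack(">IHHH", data[4:14])
    return {
        "header_length": length,
        "format": fmt,
        "tracks": n_tracks,
        # If the top bit is clear, this is ticks per quarter note.
        "division": division,
    }

def tempo_to_bpm(microseconds_per_quarter: int) -> float:
    """Convert a MIDI set-tempo value (microseconds per quarter note) to bpm."""
    return 60_000_000 / microseconds_per_quarter

def in_challenge_range(bpm: float) -> bool:
    """Hypothetical sanity check against the stated 68 to 118 bpm range."""
    return 68 <= bpm <= 118
```

In practice, `parse_midi_header` would be called on the bytes read from each `.mid` file, for example `parse_midi_header(open(path, "rb").read())`, where `path` is a placeholder for a file in the dataset.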

Evaluation dataset

The evaluation dataset contains 4000 MIDI files with the same configuration as the development dataset, with two exceptions: 1) A number of melodies composed by human composers are added, some of which are published and some of which were composed for this competition. The music style of the human-composed melodies is the same as the styles of music in the training set; this was confirmed by musicologists. 2) A number of melodies are generated by algorithms with minor algorithmic or parameter changes compared to the algorithms used for the development dataset.

test-id.mid

The final ranking of the challenge is determined by the AUC of judging the source of the melody in the evaluation dataset.
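For local validation, the AUC can be computed from a list of ground-truth labels and a list of scores. The sketch below uses the rank-based (Mann-Whitney) formulation with average ranks for ties. It assumes that AI-generated melodies are the positive class (label 1) and human-composed melodies are label 0, which matches the "higher score means more likely AI" convention of the output file; the organisers' exact evaluation script is not described on this page, so treat this as an approximation of it.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve.

    labels: 1 for AI-generated (assumed positive class), 0 for human-composed.
    scores: higher means more likely AI-generated.
    """
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    n_pos = sum(1 for _, label in pairs if label == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative label")

    rank_sum_pos = 0.0
    i = 0
    while i < n:
        # Find the group of tied scores pairs[i:j].
        j = i
        while j < n and pairs[j][0] == pairs[i][0]:
            j += 1
        # 1-based ranks i+1 .. j share their average rank.
        avg_rank = (i + 1 + j) / 2.0
        positives_in_group = sum(1 for k in range(i, j) if pairs[k][1] == 1)
        rank_sum_pos += avg_rank * positives_in_group
        i = j

    # Mann-Whitney U statistic of the positives, normalised to [0, 1].
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2.0
    return u / (n_pos * n_neg)
```

Because the AUC depends only on the ranking of the scores, any strictly increasing rescaling of a system's scores leaves it unchanged.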

Data download

Use of external data is allowed under the following conditions:

• Only open source data that can be referenced is allowed. External data refers to public datasets or pre-trained models.

• The data must be public and freely available before 15th August 2020.

• The list of external data sources used in training must be properly cited and referenced in the technical report.

Please submit ONLY one ZIP file, which includes a technical report (following the instructions for CSMT submissions) and a sub ZIP file containing the source code.

• A technical report explaining the method in sufficient detail (*.pdf). The report needs to be anonymous.

• A sub ZIP file that includes: 1. Complete system code that can be run; 2. A system output file (*.csv). The system output should be presented as a single text file (in CSV format, with a header row) containing, for each MIDI file in the evaluation set, a score (float type) for how likely the melody is to be machine composed. The higher the score, the more likely the music was composed by AI; the lower the score, the more likely it was composed by a human.

NOTICE: Multiple CSV files, effectively multiple attempts, are allowed in a single ZIP file but the attached source code should be able to produce all CSV files.

| file_name | score |
| --- | --- |
| 0.mid | 0.8 |
| 1.mid | 0.25 |
| 2.mid | 0.6 |
| 3.mid | 0.1 |
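A system output file in this layout can be written with Python's standard `csv` module. This is a minimal sketch: the comma delimiter is an assumption based on the stated CSV format, and the function name `write_scores` is illustrative.

```python
import csv
import io

def write_scores(rows, stream):
    """Write (file_name, score) pairs as CSV with the header row file_name,score.

    A comma delimiter is assumed; scores are converted to float before writing.
    """
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["file_name", "score"])
    for file_name, score in rows:
        writer.writerow([file_name, float(score)])

# Example: render the four rows shown above into an in-memory buffer.
buffer = io.StringIO()
write_scores([("0.mid", 0.8), ("1.mid", 0.25), ("2.mid", 0.6), ("3.mid", 0.1)], buffer)
csv_text = buffer.getvalue()
```

To write to disk instead, open the target file with `open(path, "w", newline="")` and pass the file object as `stream`.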

To avoid overlapping labels among the submitted systems, form your label as follows:

[first name]_[last name]_[Abbreviation of institute of the corresponding author]

For example, systems would have labels such as:

• Hua_Li_BUPT

• Michael_Jordan_UNC

Make sure your zip package follows the provided file naming convention and directory structure. The zip package example can be downloaded here.

Hua_Li_BUPT.zip                              Zip-package root, Task submissions
│
├───Hua_Li_BUPT_technical_report.pdf         Technical report
│
└───output_code.zip
    ├───Hua_Li_BUPT_code_1                   Task System code (Any language is allowed)
    ├───Hua_Li_BUPT_output_1.csv             Task System output
    ├───Hua_Li_BUPT_code_2                   Task System code
    └───Hua_Li_BUPT_output_2.csv             Task System output
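The package layout above can be assembled programmatically with Python's standard `zipfile` module. The sketch below builds the inner `output_code.zip` and the outer package in memory. It assumes that each `[label]_code_N` entry is a directory of source files; the function name and argument shapes are illustrative, and the downloadable example package remains the authoritative reference.

```python
import io
import zipfile

def build_submission(label, report_pdf, systems):
    """Return the bytes of a submission ZIP.

    label: e.g. "Hua_Li_BUPT".
    report_pdf: bytes of the technical report.
    systems: list of (code_files, csv_text) pairs, where code_files maps a
             relative file name to its bytes (assumed directory layout).
    """
    inner = io.BytesIO()
    with zipfile.ZipFile(inner, "w") as zf:
        for idx, (code_files, csv_text) in enumerate(systems, start=1):
            for name, content in code_files.items():
                zf.writestr(f"{label}_code_{idx}/{name}", content)
            zf.writestr(f"{label}_output_{idx}.csv", csv_text)

    outer = io.BytesIO()
    with zipfile.ZipFile(outer, "w") as zf:
        zf.writestr(f"{label}_technical_report.pdf", report_pdf)
        zf.writestr("output_code.zip", inner.getvalue())
    return outer.getvalue()
```

Writing the returned bytes to `Hua_Li_BUPT.zip` (with the participant's own label substituted) yields one outer ZIP that holds the report and the sub ZIP file.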

The baseline system is an AutoEncoder whose encoder and decoder each employ four fully connected layers. During training, the AutoEncoder is trained using AI-generated music, so the decoder learns to generate outputs that follow the distribution of AI-generated music. During inference, both AI-generated and human-composed music are passed through the trained AutoEncoder. Because of this training process, the reconstruction error of AI-generated music is expected to be lower than that of human-composed music.
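The idea can be illustrated with a small, dependency-free sketch. This is not the organisers' baseline code: the layer widths, the tanh activations, the plain stochastic gradient descent training, and the assumption that each melody has already been converted to a fixed-length feature vector are all hypothetical choices made for illustration. The only elements taken from the description are the four fully connected layers in each half and the use of reconstruction error, which is negated here so that a higher score means "more likely AI".

```python
import math
import random

# Hypothetical widths: encoder 16->12->8->6->4, decoder 4->6->8->12->16.
SIZES = [16, 12, 8, 6, 4, 6, 8, 12, 16]

def init_layers(sizes, rng):
    """Create one (weights, biases) pair per fully connected layer."""
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def forward(layers, x):
    """Return the activations of every layer (tanh hidden, linear output)."""
    acts = [list(x)]
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, acts[-1])) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            z = [math.tanh(v) for v in z]
        acts.append(z)
    return acts

def reconstruction_error(layers, x):
    """Mean squared error between the input and its reconstruction."""
    out = forward(layers, x)[-1]
    return sum((o - t) ** 2 for o, t in zip(out, x)) / len(x)

def train_step(layers, x, lr):
    """One gradient descent step on the reconstruction MSE of one vector."""
    acts = forward(layers, x)
    delta = [2.0 * (o - t) / len(x) for o, t in zip(acts[-1], x)]
    for i in reversed(range(len(layers))):
        weights, biases = layers[i]
        a_in = acts[i]
        # Gradient w.r.t. this layer's input, taken before the weights change.
        grad_in = [sum(weights[j][k] * delta[j] for j in range(len(weights)))
                   for k in range(len(a_in))]
        for j, d in enumerate(delta):
            for k, a in enumerate(a_in):
                weights[j][k] -= lr * d * a
            biases[j] -= lr * d
        if i > 0:
            # a_in is the tanh output of the previous layer: d tanh = 1 - a^2.
            delta = [g * (1.0 - a * a) for g, a in zip(grad_in, a_in)]

def train(layers, data, epochs=30, lr=0.05):
    """Train on feature vectors of AI-generated melodies."""
    for _ in range(epochs):
        for x in data:
            train_step(layers, x, lr)

def mean_error(layers, data):
    """Average reconstruction error over a list of feature vectors."""
    return sum(reconstruction_error(layers, x) for x in data) / len(data)

def ai_score(layers, x):
    """Higher score = more AI-like, so negate the reconstruction error."""
    return -reconstruction_error(layers, x)
```

A typical use would be `layers = init_layers(SIZES, random.Random(0))`, then `train(layers, ai_vectors)` on vectors extracted from the development dataset, then `ai_score(layers, x)` for each evaluation melody. Here `ai_vectors` and `x` stand for whatever feature representation a participant chooses.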

Contact us: Jing Yinji, challenge coordinator, Beijing University of Posts and Telecommunications, mail: jyj@bupt.edu.cn

Do I need to classify styles?

There is no need to classify styles. This challenge only asks participants to judge whether the music is composed by a human or by AI, but we would like to remind participants that there are different styles in the dataset.